The purpose of computer architecture is to design a computer that balances performance, efficiency, cost, and reliability. The design of the instruction set shows how these competing factors are traded off. More complex instruction sets let programmers write more space-efficient programs, because a single instruction can encode a higher-level abstraction. On the other hand, longer and more complex instructions take the processor longer to decode and can be more costly to implement effectively. The added complexity of a large instruction set also leaves more room for unreliability when instructions interact in unexpected ways.
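
To make the code-size versus decode-cost trade-off concrete, the Python sketch below compares one memory-to-memory add in a hypothetical "complex" ISA with the equivalent four-instruction sequence in a hypothetical load/store ISA. The mnemonics, byte sizes, and decode-cycle counts are invented for illustration and do not describe any real processor.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Instr:
    mnemonic: str
    size_bytes: int     # encoded length in memory (hypothetical)
    decode_cycles: int  # cycles the decoder spends on it (hypothetical)


# Hypothetical complex ISA: a single memory-to-memory add does all the work.
complex_seq = [Instr("ADDM [c], [a], [b]", 7, 3)]

# Hypothetical load/store ISA: the same work needs four simple, fixed-size instructions.
simple_seq = [
    Instr("LOAD r1, [a]", 4, 1),
    Instr("LOAD r2, [b]", 4, 1),
    Instr("ADD r3, r1, r2", 4, 1),
    Instr("STORE r3, [c]", 4, 1),
]


def code_size(seq):
    """Total bytes the sequence occupies in memory."""
    return sum(i.size_bytes for i in seq)


def slowest_decode(seq):
    """Longest time the decoder spends on any single instruction."""
    return max(i.decode_cycles for i in seq)


for name, seq in (("complex", complex_seq), ("simple", simple_seq)):
    print(f"{name}: {code_size(seq)} bytes, slowest decode {slowest_decode(seq)} cycles")
```

Under these assumed numbers the complex sequence is smaller (7 versus 16 bytes), but its single instruction is the slowest to decode, which is the tension described above.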

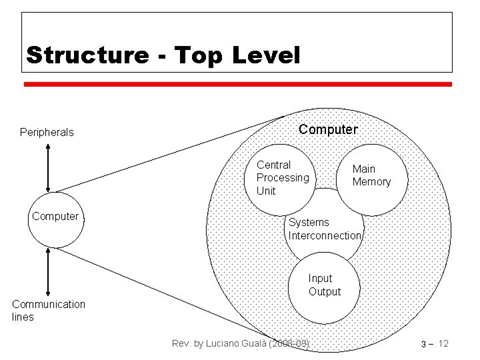

Fig. Link: https://slidetodoc.com/presentation_image_h/77dbbfaa75726653f0867aeea5a04b26/image-12.jpg

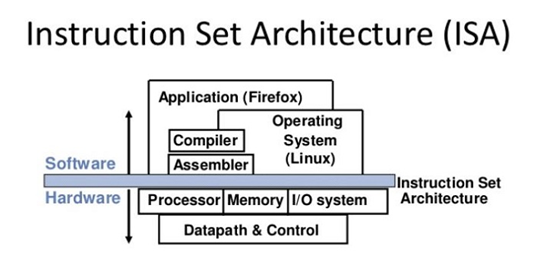

Instruction Set Architecture:

The instruction set architecture (ISA) is the interface between a computer's software and hardware, and it can also be seen as the programmer's view of the machine. Computers do not directly understand high-level programming languages such as Java and C++, or indeed most commonly used programming languages. A processor only recognizes instructions that are encoded numerically, usually as binary numbers. An ISA may also define short mnemonic names for its instructions, and these names can be recognized by a software development tool called an assembler. An assembler is a computer program that translates a human-readable form of the ISA into a computer-readable form. Disassemblers, which perform the reverse translation, are also widely available and are often used in debuggers and other software to isolate and correct faults in binary programs.

ISAs vary in quality and completeness. A good ISA strikes a balance between programmer convenience, code size, the cost of the hardware needed to interpret the instructions, and the speed of the computer. Memory organization defines how instructions interact with memory and how memory interacts with itself. During design emulation, emulators can run programs written in a proposed instruction set. Modern emulators can measure size, cost, and speed to determine whether a particular ISA is meeting its goals.
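
The assembler, disassembler, and emulator roles described above can be sketched in a few lines of Python. Everything here is a hypothetical toy: the ISA, its opcodes and mnemonics, and the 32-bit `[opcode][a][b][c]` encoding are invented for illustration and correspond to no real processor. The assembler turns mnemonics into numeric machine words, the disassembler reverses that, and the emulator runs the program while counting executed instructions, a crude version of the size and speed measurements an emulator can report for a proposed ISA.

```python
# Hypothetical toy ISA: each instruction is one 32-bit word laid out as
# [opcode:8][a:8][b:8][c:8]. Opcodes and mnemonics are invented.
OPCODES = {"LI": 0x01, "ADD": 0x02, "SUB": 0x03, "JNZ": 0x04, "HALT": 0xFF}
MNEMONICS = {code: name for name, code in OPCODES.items()}


def decode(word: int) -> tuple[int, int, int, int]:
    """Split a machine word into its opcode and three 8-bit operand fields."""
    return (word >> 24) & 0xFF, (word >> 16) & 0xFF, (word >> 8) & 0xFF, word & 0xFF


def assemble(source: str) -> list[int]:
    """Translate lines such as 'ADD r1 r1 r0' into numeric machine words."""
    words = []
    for line in source.splitlines():
        line = line.split("#")[0].strip()  # drop comments and blank lines
        if not line:
            continue
        name, *operands = line.split()
        # Register names lose their 'r' prefix; bare numbers are immediates.
        a, b, c = ([int(tok.lstrip("r")) for tok in operands] + [0, 0, 0])[:3]
        words.append((OPCODES[name] << 24) | (a << 16) | (b << 8) | c)
    return words


def disassemble(words: list[int]) -> list[str]:
    """Turn machine words back into text (register vs. immediate is not recovered)."""
    return [f"{MNEMONICS[op]} {a} {b} {c}" for op, a, b, c in map(decode, words)]


def emulate(words: list[int], num_regs: int = 4) -> tuple[list[int], int]:
    """Run a program; return the final registers and the instruction count."""
    regs, pc, executed = [0] * num_regs, 0, 0
    while True:
        op, a, b, c = decode(words[pc])
        pc += 1
        executed += 1
        name = MNEMONICS[op]
        if name == "LI":        # LI rd imm: load an immediate value
            regs[a] = b
        elif name == "ADD":     # ADD rd rs rt
            regs[a] = regs[b] + regs[c]
        elif name == "SUB":     # SUB rd rs rt
            regs[a] = regs[b] - regs[c]
        elif name == "JNZ":     # JNZ rs target: jump if register is non-zero
            if regs[a] != 0:
                pc = b
        elif name == "HALT":
            return regs, executed


program = assemble("""
LI r0 5       # loop counter
LI r1 0       # running sum
LI r2 1       # constant 1
ADD r1 r1 r0  # word 3: sum += counter
SUB r0 r0 r2  # counter -= 1
JNZ r0 3      # repeat while counter != 0
HALT
""")
regs, executed = emulate(program)
print(disassemble(program)[0])              # LI 0 5 0
print(regs[1], executed, 4 * len(program))  # 15 19 28
```

The program sums 5 + 4 + 3 + 2 + 1; the last line prints the result held in r1 (15), the dynamic instruction count (19), and the static code size in bytes (28).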

Fig. Link: https://www.designnews.com/sites/cet.com/files/ISA-700W_0.jpg

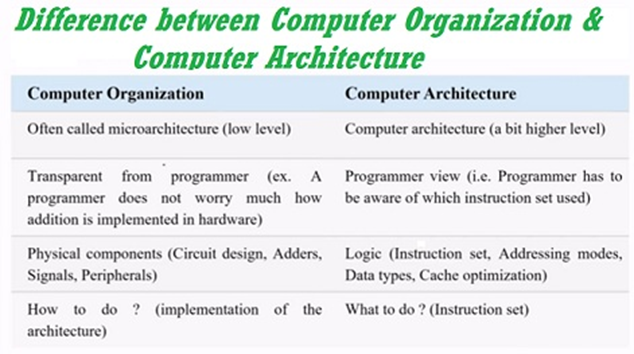

Computer Organization:

Computer organization helps optimize performance-based products. Software developers, for example, need to know the processing power of the processor they target. They may need to tune their software to get the most performance for the lowest cost, which can require a detailed analysis of the computer's organization. Computer organization also helps in selecting a processor for a particular project: multimedia projects may need very fast data access, while virtual machines may need fast interrupts. Some tasks also require additional components. The organization and features of a computer also affect its power consumption and processor cost.

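Once the instruction set and organization have been designed, a practical machine must be built; this process is called implementation. Implementation can be divided into several steps: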

- Logic implementation designs the circuits required at the logic-gate level.

- Circuit implementation produces transistor-level designs of basic elements, such as gates, multiplexers, and latches, as well as of some larger blocks, such as ALUs and caches, that may be implemented at the logic-gate level or even at the physical level if the design calls for it.

- Physical implementation draws the physical circuits. The various circuit components are placed in a chip floor plan or on a board, and the wires connecting them are created.

- Design validation tests the computer as a whole to check that it works correctly in all situations and with all timings. Once design validation begins, the design is first tested at the logic level using logic emulators.
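
The logic-implementation and design-validation steps can be illustrated at toy scale. The Python sketch below, a software stand-in for logic-level tools, builds a 4-bit ripple-carry adder only from AND, OR, and XOR gates, then performs a simple form of design validation by comparing it against ordinary integer addition for every possible input. The adder design and all function names are illustrative choices, and a purely functional model like this ignores timing, which real design validation must also cover.

```python
from itertools import product


# Logic implementation: basic gates on single bits (0 or 1).
def and_gate(a: int, b: int) -> int:
    return a & b


def or_gate(a: int, b: int) -> int:
    return a | b


def xor_gate(a: int, b: int) -> int:
    return a ^ b


def full_adder(a: int, b: int, carry_in: int) -> tuple[int, int]:
    """One-bit full adder wired from gates; returns (sum_bit, carry_out)."""
    partial = xor_gate(a, b)
    sum_bit = xor_gate(partial, carry_in)
    carry_out = or_gate(and_gate(a, b), and_gate(partial, carry_in))
    return sum_bit, carry_out


def ripple_add(x: int, y: int, width: int = 4) -> tuple[int, int]:
    """Larger block: chain `width` full adders; returns (sum, carry_out)."""
    carry, result = 0, 0
    for i in range(width):
        bit, carry = full_adder((x >> i) & 1, (y >> i) & 1, carry)
        result |= bit << i
    return result, carry


# Design validation: compare the gate-level design with a reference model
# (ordinary integer addition) for every possible pair of inputs.
def validate(width: int = 4) -> list[tuple[int, int]]:
    failures = []
    for x, y in product(range(2 ** width), repeat=2):
        expected = ((x + y) % 2 ** width, (x + y) >> width)
        if ripple_add(x, y, width) != expected:
            failures.append((x, y))
    return failures


print(validate())  # [] means no mismatches were found
```

Exhaustive checking like this is only practical for tiny blocks: with two 4-bit inputs there are 256 cases, but the count doubles with every added input bit.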

The figure above depicts the distinction between computer organization and architecture.

Design Goals:

The performance of a modern computer is commonly expressed in instructions per cycle (IPC), which measures the efficiency of the architecture at any clock frequency; at a given clock frequency, a higher IPC means a faster computer. Older computers had IPC counts as low as 0.1, while modern processors can easily reach nearly 1. Superscalar processors may reach three to five IPC by executing several instructions per clock cycle. Counting machine-language instructions can be misleading, however, because instructions do varying amounts of work in different ISAs. The "instruction" in standard measurements is therefore not a count of the ISA's machine-language instructions but a unit of measurement, usually based on the speed of the VAX computer architecture.

Other factors that influence speed include the mix of functional units, bus speeds, available memory, and the type and order of instructions in the programs. The two main types of speed are latency and throughput. Latency is the time between the start of an operation and its completion. Throughput is the amount of work completed per unit of time. Interrupt latency is the system's guaranteed maximum response time to an electronic event.

Benchmarking takes all of these factors into account by measuring the time a computer takes to run through a series of test programs. Although benchmarking shows a machine's strengths, it should not be the only basis for choosing a computer. Measured machines often rank differently on different measures: one system might handle scientific applications quickly, while another renders video games more smoothly. Furthermore, designers may add special features to their products, through hardware or software, that let a specific benchmark run quickly without offering similar benefits to general workloads.

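
The IPC relationship and the latency/throughput distinction can be checked with a little arithmetic. The Python sketch below assumes the standard relation execution time = instruction count / (IPC × clock frequency) and a hypothetical workload of one billion instructions on a 3 GHz clock; the task timings are also invented. It shows that raising IPC shortens run time at a fixed clock, and that overlapping tasks raises throughput without changing the latency of any single task.

```python
def execution_time(instructions: float, ipc: float, clock_hz: float) -> float:
    """Seconds to run: instructions / (instructions per cycle * cycles per second)."""
    return instructions / (ipc * clock_hz)


def latency_and_throughput(tasks: list[tuple[float, float]]) -> tuple[float, float]:
    """tasks holds (start, end) times; returns (mean latency, tasks per unit time)."""
    latencies = [end - start for start, end in tasks]
    window = max(end for _, end in tasks) - min(start for start, _ in tasks)
    return sum(latencies) / len(latencies), len(tasks) / window


N, F = 1_000_000_000, 3e9  # hypothetical: 1e9 instructions on a 3 GHz clock
for ipc in (0.1, 1.0, 4.0):
    print(f"IPC {ipc}: {execution_time(N, ipc, F):.3f} s")

serial = [(0, 4), (4, 8), (8, 12), (12, 16)]    # hypothetical times in ms
overlapped = [(0, 4), (1, 5), (2, 6), (3, 7)]   # same tasks, started 1 ms apart
print(latency_and_throughput(serial))      # latency 4.0 ms, 0.25 tasks per ms
print(latency_and_throughput(overlapped))  # latency 4.0 ms, about 0.571 tasks per ms
```
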
Power efficiency is another important concern in modern computer architectures. Higher power efficiency can often be obtained at the price of lower speed or higher cost. The typical measure of power efficiency in computer architecture is MIPS/W (millions of instructions per second per watt).
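
As a rough illustration of the MIPS/W metric and the speed-for-efficiency trade-off, the Python sketch below compares two hypothetical chips running the same workload; the instruction count, run times, and power figures are invented.

```python
def mips_per_watt(instructions: int, seconds: float, watts: float) -> float:
    """Millions of instructions per second, divided by average power in watts."""
    mips = instructions / seconds / 1e6
    return mips / watts


# Two hypothetical chips running the same 2-billion-instruction workload.
fast = mips_per_watt(2_000_000_000, seconds=1.0, watts=40.0)    # 2000 MIPS at 40 W
frugal = mips_per_watt(2_000_000_000, seconds=2.0, watts=10.0)  # 1000 MIPS at 10 W
print(fast, frugal)  # 50.0 100.0
```

Here the slower chip delivers half the MIPS but twice the MIPS/W.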

Modern circuits require less power per transistor as the number of transistors per chip grows, because each transistor added to a chip needs its own power supply and new pathways to deliver that power. However, the number of transistors per chip is now increasing at a slower rate. As a result, power efficiency is becoming as important as, if not more important than, fitting more transistors onto a single chip.

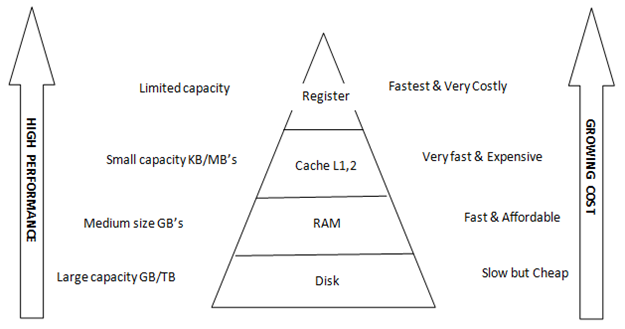

Fig. Link: https://blogs.sap.com/wp-content/uploads/2011/12/mh2_75718.png

Clock frequency has increased much more slowly in recent years than power consumption has improved. This shift has been driven by the end of Moore's Law and by demand for longer battery life and smaller mobile devices. The change in focus from higher clock rates to power consumption and miniaturization is shown by the significant reductions in power consumption, as much as 50%, that Intel reported with the release of its Haswell microarchitecture, where it lowered its power consumption benchmark from 30-40 watts down to 10-20 watts.