A web crawler, spider, or search engine bot downloads and indexes content from all over the Internet. The goal of such a bot is to learn what (almost) every webpage on the web is about, so that the information can be retrieved when it’s needed. They’re called “web crawlers” because crawling is the technical term for automatically accessing a website and obtaining data via a software program.

These bots are almost always operated by search engines. By applying a search algorithm to the data collected by web crawlers, search engines can provide relevant links in response to user search queries, generating the list of webpages that shows up after a user types a search into Google or Bing (or another search engine).

A web crawler bot is like someone who goes through all the books in a disorganized library and puts together a card catalog so that anyone who visits the library can quickly and easily find the information they need. To help categorize and sort the library’s books by topic, the organizer will read the title, summary, and some of the internal text of each book to figure out what it’s about.

However, unlike a library, the Internet is not composed of physical piles of books, and that makes it hard to tell whether all the necessary information has been indexed properly, or if vast quantities of it are being overlooked. To try to find all the relevant information the Internet has to offer, a web crawler bot will start with a certain set of known webpages and then follow hyperlinks from those pages to other pages, follow hyperlinks from those other pages to additional pages, and so on.

It is unknown how much of the publicly available Internet is actually crawled by search engine bots. Some sources estimate that only 40-70% of the Internet is indexed for search, and that is billions of webpages.

WHAT IS SEARCH INDEXING?

Search indexing is like creating a library card catalog for the Internet so that a search engine knows where on the Internet to retrieve information when a person searches for it. It can also be compared to the index in the back of a book, which lists all the places in the book where a certain topic or phrase is mentioned.

Indexing focuses mostly on the text that appears on the page, and on the metadata about the page that users don’t see. When most search engines index a page, they add all the words on the page to the index, except for words like “a,” “an,” and “the” in Google’s case. When users search for those words, the search engine goes through its index of all the pages where those words appear and selects the most relevant ones.
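The indexing step described above can be sketched as a small inverted index. This is only an illustration under stated assumptions, not how any particular search engine works: the stop-word list contains just the three words mentioned in the text, the page URLs and contents are made up, and the `build_index` and `search` helpers are hypothetical names. Ranking by relevance is left out.

```python
from collections import defaultdict

# Assumed stop-word list, limited to the three examples given in the text.
STOP_WORDS = {"a", "an", "the"}

def build_index(pages):
    """Map each non-stop word to the set of page URLs that contain it."""
    index = defaultdict(set)
    for url, text in pages.items():
        for word in text.lower().split():
            word = word.strip(".,!?;:\"'()")  # drop surrounding punctuation
            if word and word not in STOP_WORDS:
                index[word].add(url)
    return index

def search(index, query):
    """Return the URLs that contain every non-stop word of the query, sorted."""
    words = [w for w in query.lower().split() if w not in STOP_WORDS]
    if not words:
        return []
    result = set(index.get(words[0], set()))
    for word in words[1:]:
        result &= index.get(word, set())
    return sorted(result)

# Hypothetical pages, made up for illustration.
pages = {
    "https://example.com/spiders": "The spider crawls a web.",
    "https://example.com/bots": "A bot crawls the Internet.",
}
index = build_index(pages)
print(search(index, "the spider"))
print(search(index, "crawls"))
```

Looking a word up in the index is a single dictionary access, which is why the work of reading every page is done once at indexing time instead of on every search.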

In the context of search indexing, metadata is data that tells search engines what a webpage is about. Often the meta title and meta description are what will appear on search engine results pages, as opposed to content from the webpage that’s visible to users.
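As a rough illustration of reading that metadata, the sketch below pulls the `<title>` text and the `description` meta tag out of an HTML document using Python's standard `html.parser` module. The HTML snippet and the `MetaExtractor` class name are invented for this example; a real indexer would handle many more tags and malformed markup.

```python
from html.parser import HTMLParser

class MetaExtractor(HTMLParser):
    """Collect the <title> text and the meta description of a page."""

    def __init__(self):
        super().__init__()
        self.title = ""
        self.description = ""
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "title":
            self._in_title = True
        elif tag == "meta" and (attrs.get("name") or "").lower() == "description":
            self.description = attrs.get("content") or ""

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        # Only text that appears inside <title>...</title> is kept.
        if self._in_title:
            self.title += data

# Hypothetical page, made up for illustration.
html = """<html><head>
<title>Example Page</title>
<meta name="description" content="A short summary shown in search results.">
</head><body><p>Visible body text.</p></body></html>"""

extractor = MetaExtractor()
extractor.feed(html)
print(extractor.title)
print(extractor.description)
```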

HOW DO WEB CRAWLERS WORK?

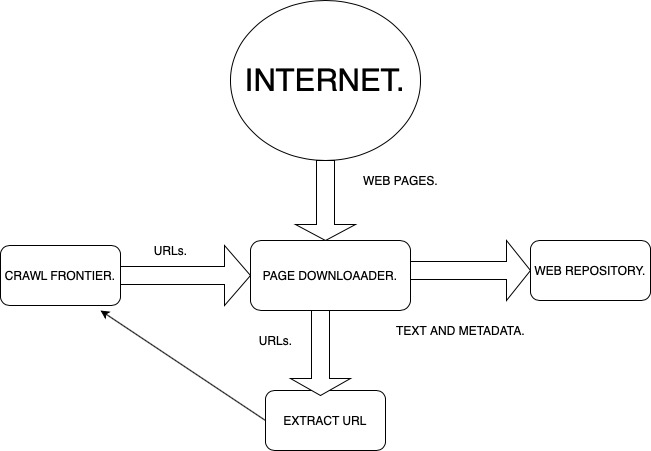

The Internet is constantly changing and expanding. Because it is not possible to know how many total webpages there are on the Internet, web crawler bots start from a seed, or a list of known URLs. They crawl the webpages at those URLs first. As they crawl those webpages, they will find hyperlinks to other URLs, and they add those to the list of pages to crawl next.
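The seed-and-follow loop described above can be sketched as a breadth-first traversal. To keep the example self-contained and runnable without network access, an in-memory dictionary (`LINKS`, a made-up link graph) stands in for downloading pages and parsing their hyperlinks; the `crawl` function and its `max_pages` limit are assumptions of this sketch.

```python
from collections import deque

# Hypothetical link graph standing in for the web: URL -> links found on that page.
LINKS = {
    "https://example.com/": ["https://example.com/a", "https://example.com/b"],
    "https://example.com/a": ["https://example.com/b", "https://example.com/c"],
    "https://example.com/b": ["https://example.com/"],
    "https://example.com/c": [],
}

def crawl(seeds, max_pages=100):
    """Visit pages breadth-first from the seeds; return URLs in crawl order."""
    frontier = deque(seeds)  # pages waiting to be crawled
    seen = set(seeds)        # every URL ever queued, to avoid repeats
    order = []
    while frontier and len(order) < max_pages:
        url = frontier.popleft()
        order.append(url)
        # A real crawler would download the page and parse its links here.
        for link in LINKS.get(url, []):
            if link not in seen:
                seen.add(link)
                frontier.append(link)
    return order

print(crawl(["https://example.com/"]))
```

The `seen` set matters because pages link back to each other; without it the crawler would loop between the home page and the pages it links to.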

Given the vast number of webpages on the Internet that could be indexed for search, this process could go on almost indefinitely. However, a web crawler will follow certain policies that make it more selective about which pages to crawl, in what order to crawl them, and how often they should crawl them again to check for content updates.

THE RELATIVE IMPORTANCE OF EACH PAGE: Most web crawlers don’t crawl the entire publicly available Internet and aren’t intended to; instead they decide which pages to crawl first based on the number of other pages that link to that page, the number of visitors that page gets, and other factors that signify the page’s likelihood of containing important information.

The idea is that a webpage that is cited by a lot of other webpages and gets a lot of visitors is likely to contain high-quality, authoritative information, so it’s especially important that a search engine has it indexed, just as a library would make sure to keep plenty of copies of a book that gets checked out by lots of people.
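One simple way to picture this prioritization is to order pages by how many other pages link to them. The sketch below does only that, using a priority queue; visitor counts and the other signals mentioned above are not modeled, the link graph is invented, and `crawl_order` is a hypothetical helper name, not any search engine's actual scoring.

```python
import heapq
from collections import Counter

def crawl_order(link_graph):
    """Order URLs so that pages with the most inbound links come first."""
    inbound = Counter()
    for source, targets in link_graph.items():
        for target in set(targets):   # count each linking page once
            if target != source:      # ignore self-links
                inbound[target] += 1
    # heapq is a min-heap, so negate the count; the URL breaks ties alphabetically.
    heap = [(-inbound[url], url) for url in link_graph]
    heapq.heapify(heap)
    return [heapq.heappop(heap)[1] for _ in range(len(heap))]

# Hypothetical link graph, made up for illustration.
graph = {
    "https://example.com/home": ["https://example.com/news", "https://example.com/about"],
    "https://example.com/news": ["https://example.com/home", "https://example.com/about"],
    "https://example.com/about": ["https://example.com/home"],
    "https://example.com/orphan": ["https://example.com/home"],
}
print(crawl_order(graph))
```

Here the home page is linked from three pages, the about page from two, the news page from one, and the orphan page from none, so they are crawled in that order.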

REVISITING WEBPAGES: Content on the Web is continually being updated, removed, or moved to new locations. Web crawlers will periodically need to revisit pages to make sure the latest version of the content is indexed.

ROBOTS.txt REQUIREMENTS: Web crawlers also decide which pages to crawl based on the robots.txt protocol (also known as the robots exclusion protocol). Before crawling a webpage, they will check the robots.txt file hosted by that page’s web server. A robots.txt file is a text file that specifies the rules for any bots accessing the hosted website or application. These rules define which pages the bots can crawl, and which links they can follow.

All these factors are weighted differently within the proprietary algorithms that each search engine builds into its spider bots. Web crawlers from different search engines will behave slightly differently, although the end goal is the same: to download and index content from webpages.
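The robots.txt check can be tried out with Python's standard `urllib.robotparser` module. In this sketch the robots.txt content, the `ExampleBot` user agent, and the URLs are all made up, and the rules are parsed from a string so that nothing is fetched over the network; a real crawler would load the file from the site itself.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content, made up for illustration.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Crawl-delay: 5
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# "ExampleBot" is an invented user agent; the "*" rules apply to it.
allowed = parser.can_fetch("ExampleBot", "https://example.com/index.html")
blocked = parser.can_fetch("ExampleBot", "https://example.com/private/data.html")
delay = parser.crawl_delay("ExampleBot")

print(allowed, blocked, delay)
```

A crawler that respects these rules would skip anything under `/private/` and wait the requested number of seconds between requests to this site.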

WHY ARE WEB CRAWLERS CALLED SPIDERS?

The Internet, or at least the part that most users access, is also known as the World Wide Web; in fact, that’s where the “www” part of most website URLs comes from. It was only natural to call search engine bots “spiders,” because they crawl all over the Web, just as real spiders crawl on spiderwebs.

What is the difference between web crawling and web scraping?

Web scraping, data scraping, or content scraping is when a bot downloads the content on a website without permission, often with the intention of using that content for a malicious purpose.

Web scraping is usually much more targeted than web crawling. Web scrapers may be after specific pages or specific websites only, while web crawlers will keep following links and crawling pages continuously.

Also, web scraper bots may disregard the strain they put on web servers, while web crawlers, especially those from major search engines, will obey the robots.txt file and limit their requests so as not to overtax the web server.

How do web crawlers affect SEO?

SEO stands for search engine optimization, and it is the discipline of readying content for search indexing so that a website shows up higher in search engine results.

If spider bots don’t crawl a website, then it can’t be indexed, and it won’t show up in search results. For this reason, if a website owner wants to get organic traffic from search results, it is very important that they don’t block web crawler bots.

What web crawler bots are active on the Internet?

The bots from the major search engines are called:

- Google: Googlebot (actually two crawlers, Googlebot Desktop and Googlebot Mobile, for desktop and mobile searches)
- Bing: Bingbot
- Yandex (Russian search engine): Yandex Bot
- Baidu (Chinese search engine): Baidu Spider

There are also many less common web crawler bots, some of which aren’t associated with any search engine.